
DataCore's Caringo buy spotlights need for object storage

Object store acquisitions by DataCore, Nvidia and Quantum underscore the growing importance of object storage to address enterprise workloads ranging from archives to analytics.

DataCore Software's recent acquisition of Caringo threw object storage into the spotlight again, a year after Nvidia and Quantum completed acquisitions of similar scale-out technology designed to store enormous volumes of data.

Although the three acquisitions fill different needs for each vendor, they collectively reflect the expanding number of use cases that object storage now targets. Object stores have traditionally focused on backups and archives of cold, unstructured data. More recently, however, higher-performing options, including some that use flash, have become a consideration for AI, machine learning and analytics workloads.

GPU pioneer Nvidia, for instance, uses the object storage software it bought from SwiftStack in the internal shared AI infrastructure that its data scientists and researchers rely on, according to Manuvir Das, head of enterprise computing at Nvidia. Das said the SwiftStack software also factors heavily in its partnership with automaker Daimler to build an in-vehicle computing system and AI computing infrastructure.

Before purchasing SwiftStack, Nvidia had worked with the San Francisco object storage specialist for more than 18 months on addressing the data challenges associated with running AI applications at massive scale. SwiftStack's 1space technology runs between the compute and storage servers to optimally place data.

Das said SwiftStack's 1space would serve as a connector between the heterogeneous storage systems that Nvidia's enterprise customers already own, enabling them to reuse those storage assets for AI. But Nvidia has no plans to sell the SwiftStack software as a product.

"We are not a storage vendor," Das said.

DataCore, Quantum target classic object use cases

DataCore and Quantum are primarily targeting traditional object storage use cases with their products, at least initially. Storing and protecting large unstructured data sets has driven most of ActiveScale's growth since Quantum completed its purchase of the Western Digital object storage subsidiary in March 2020, according to Eric Bassier, the company's senior director of products.

Quantum had sold and supported ActiveScale through its branded Lattus appliances for more than five years and was an original investor in Amplidata, the startup that developed ActiveScale before its 2015 sale to Western Digital. Since bringing the object store in-house, Quantum has worked to improve its performance, add features in areas such as security and resiliency, and extend ActiveScale's Dynamic Data Placement and erasure coding technology to new media, Bassier said.

A pioneer in hard disk drive technology, Quantum sold its HDD business in 2001 to focus on storage systems and tape libraries. The San Jose, Calif., storage vendor's portfolio now includes NVMe flash and hybrid arrays, its scale-out StorNext file system, LTO tape storage, backup appliances and video recording servers in addition to the ActiveScale object storage.

DataCore plugs object storage gap

Founded in 1998, DataCore had long sold software-defined storage before acquiring Caringo. The Fort Lauderdale, Fla., software vendor bought Caringo to fill a gap in its DataCore One vision that aims to unify the three main types of storage -- block, file and object -- under a common control plane. Caringo's Swarm multi-petabyte object store joined block-based SANsymphony virtualization and file-based vFilO as the main products in DataCore's storage portfolio.

Swarm's initial focus will be archiving and backing up files, images and medical records, as well as storing, managing and directly streaming media without the need for a web or application server, according to Gerardo Dada, DataCore's chief marketing officer.

"Over time, as we run this by our customers and our partners, we will get a lot more clarity about the use cases where they see this product being applicable," Dada said. He did not rule out using Swarm for workloads requiring higher performance, claiming benchmarks show Swarm is faster than other object stores. He said Caringo had some success with high-performance computing customers.

DataCore plans to recommend Swarm to customers that need to store 100 TB or more of data. Caringo's portfolio also includes virtual drive technology to drag and drop files to Swarm, FileFly to move cold data from primary storage to Swarm, and SwarmFS to provide an NFS and SMB interface to Swarm. Dada said DataCore would keep FileFly and include SwarmFS with the core Swarm product so customers won't have to pay extra for it.

But the main focus of the DataCore One vision is unifying SANsymphony, vFilO and Swarm through DataCore Insight Services (DIS). Dada said DIS would give customers a set of data services to apply across all their storage systems and enable them to move data freely between block, file and cheaper object storage, and replicate to the public cloud for deep archiving.

Dada said vFilO, which incorporates technology from a DataCore partner, can already export data automatically to Swarm or any S3-compatible object store based on policies such as a file's age. Because vFilO is a virtualization layer, users can view files as if they haven't moved, he said. Dada noted that SANsymphony supports auto-tiering of data based on performance requirements.

Realizing the full DataCore One vision will require integration work, but CEO Dave Zabrowski asserted the time frame would be "measured in months, not years." That may come as welcome news for customers that were looking into other object stores. Zabrowski said DataCore and Caringo had some common customers, including two cloud service providers in Europe and a hospital.

DataCore One competition

But Caringo's Swarm was hardly the most popular object storage product on the market. Dada said he expects Swarm to become more successful through DataCore's network of channel partners than it had been through Caringo's direct sales strategy. Zabrowski said DataCore expects its chief competition to come from traditional storage vendors with "siloed" products.

Marc Staimer, president of Dragon Slayer Consulting, cautioned that DataCore does not have a unified storage system supporting block, file and object through a single software stack in the way that startup StorOne does. But Staimer said having the separate SANsymphony, vFilO and Swarm products connected through a common management layer would benefit customers.

"Customers want flexibility. That's what it all comes down to -- not having to buy multiple systems, multiple training, multiple management, multiple rack space, etc.," Staimer said. "Ultimately people want to be able to limit the amount of hardware they have. That's one of the reasons they go to the cloud, where they can get block, file and object."

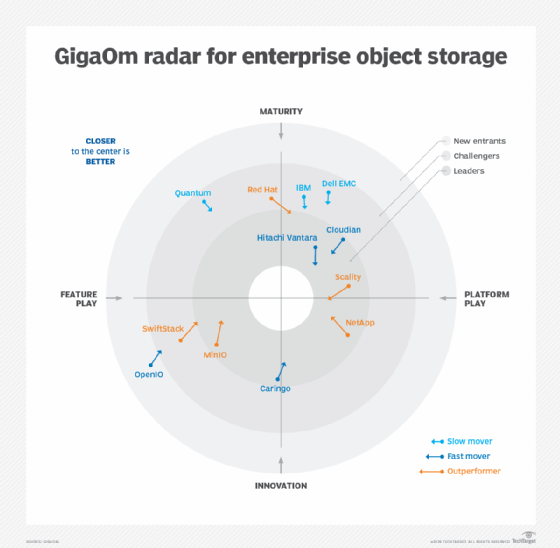

Enrico Signoretti, a research analyst at GigaOm, said all vendors are proposing multiprotocol strategies. He cited the example of NetApp adding object storage to its arrays to do block, file and object on a single system. He said Hewlett Packard Enterprise is the only major vendor lacking object storage in its product portfolio. HPE partners with Scality for object storage.

Julia Palmer, a research vice president at Gartner, said it was a great move for DataCore to expand its data services from structured data, with SANsymphony, to the unstructured world, with vFilO and now Caringo Swarm, because unstructured data is growing rapidly. But she said the competition in the unstructured data market would be tough for DataCore against big storage vendors such as Dell EMC, NetApp, IBM, Hitachi and Pure Storage.

Although more products now support multiple types of storage, Gartner has observed that large enterprises still tend to seek out "best of breed" in each category rather than unified or universal storage, Palmer said. Midsize and edge deployments, however, are starting to show interest in unified products, she said. DataCore has historically focused on midmarket enterprises that often prefer to buy all their data services from a single provider, Palmer added.

"DataCore is going to tell a consolidation story. They've been very good at that in the past, and now they can add object to it," said Eric Burgener, a research vice president at IDC. "Although nobody has specifically asked me for block, file and object in the same system, they are very interested in the simplification of the data pipeline."

Economic benefit to single storage platform

Burgener said customers would gain an economic advantage if they could run, monitor and manage their block-based, latency-sensitive legacy workloads on the same platform as their new big data analytics applications. The challenge for vendors is meeting the performance requirements of the disparate workloads, he said.

"Because end users have not seen the ability to meet those performance requirements with the same platforms in the past, a lot of them have shied away from these kinds of products," Burgener said. "If DataCore can prove that it can run all the different types of access methods and I/O profiles on the same system and meet the performance requirements for all of them, that's an interesting value proposition."

Caringo's high-performance object store stands out because the software runs directly out of DRAM, delivering more performance than most other object stores, Burgener noted. But he does not expect DataCore to compete against vendors such as StorOne and Vast Data that use dense QLC flash and storage class memory to accelerate next-generation workloads.

Burgener expects DataCore to go up against other software-defined storage options, such as IBM Spectrum Virtualize and DDN's Nexenta, for general-purpose block and file storage sharing. He also expects DataCore to compete against major vendors of external SAN and NAS systems by providing much of the same storage management capability with a better economic value proposition and more flexibility to accommodate heterogeneous storage.