In today’s digital landscape, the challenges of handling data go beyond its explosive growth. Data volume has undoubtedly surged, but the harder problems are how frequently data is accessed and where it is stored. Consider a common pattern: roughly 10% of stored data is responsible for about 90% of I/O operations. That crucial 10% doesn’t remain constant, either. It shifts continuously, reflecting the evolving needs and priorities of the organization.

The remaining 90% of data, which is largely dormant, presents its own set of challenges. Identifying this vast pool of dormant data and deciding where and how to store it efficiently is no small feat. If high-performance storage is squandered on dormant data, less of it is left for the data that actually needs it, and organizations risk slowing down their critical operations.

In this setting, organizations typically face an all-or-nothing choice for each LUN: a tug-of-war between performance and capacity.

- At one end of the rope, there’s performance optimization. It offers unmatched speed and agility, but without data reduction it requires more raw capacity (and thus higher cost). Data tiering may mitigate some concerns, but the expense of storing infrequently accessed data remains.

- Pulling from the other end, we have capacity optimization. By applying deduplication and compression, this route offers a smaller storage footprint. It is the more economical option, but it risks performance limitations, even on all-flash configurations.

This lack of adaptability often forces IT teams into the uncomfortable position of choosing between optimizing for performance or conserving storage space – a decision no IT team wants to make.

EXAMPLE: Let’s consider a hypervisor datastore serving 52 VMs, two of which have high I/O demands. Capacity optimization techniques, which save space but add latency, can’t be applied here. The high-speed needs of these two VMs make any additional delay unacceptable, forcing the entire datastore to prioritize performance over capacity savings, even though the other 50 VMs could tolerate the extra latency.

Unveiling Adaptive Data Placement

Recognizing these challenges, DataCore has introduced a groundbreaking capability in the SANsymphony software-defined storage platform, starting with version 10.0 PSP17: Adaptive Data Placement. At its heart, Adaptive Data Placement marries the capabilities of auto-tiering with inline deduplication and compression. This blend ensures data isn’t merely stored; it’s stored intelligently, optimally, and in a manner that guarantees peak performance without squandering valuable storage resources.

How It Works

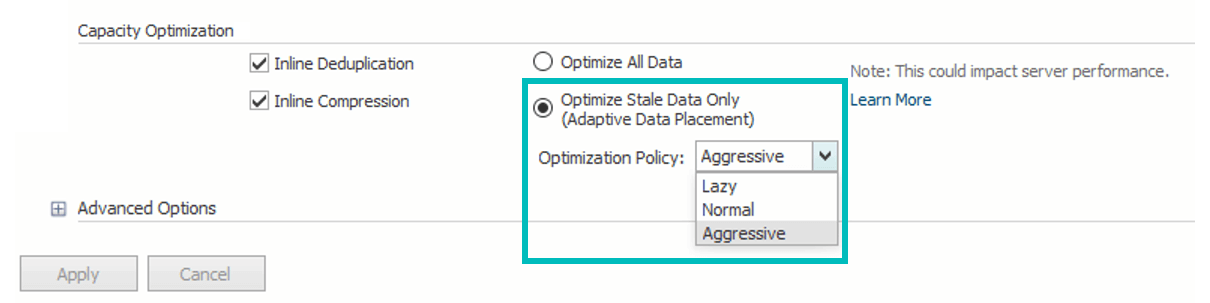

First, the admin configures the preferred capacity optimization setting by choosing one of three policies: Aggressive, Normal, or Lazy. Each policy defines the targeted percentage of cold/warm data to be optimized. Admins can apply a policy to inline deduplication, inline compression, or both.

Choosing from the 3 New Capacity Optimization Policies
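
To make the policy choice concrete, here is a minimal sketch of how such a setting could be modeled. The percentages behind each policy are not stated in this article, so the fractions below are placeholders, and the class and field names are hypothetical; they do not reflect SANsymphony's actual interface.

```python
from dataclasses import dataclass
from enum import Enum


class Policy(Enum):
    # Placeholder fractions of cold/warm data to optimize (assumed values).
    AGGRESSIVE = 0.75
    NORMAL = 0.50
    LAZY = 0.25


@dataclass(frozen=True)
class CapacityOptimizationSettings:
    """Hypothetical settings object: one policy, applied to dedup, compression, or both."""

    policy: Policy
    deduplication: bool = True
    compression: bool = True

    def __post_init__(self):
        # A policy only makes sense if at least one technique is enabled.
        if not (self.deduplication or self.compression):
            raise ValueError("enable deduplication, compression, or both")

    def target_block_count(self, eligible_blocks: int) -> int:
        """Number of cold/warm blocks the policy aims to optimize."""
        return int(eligible_blocks * self.policy.value)


settings = CapacityOptimizationSettings(Policy.NORMAL, compression=False)
print(settings.target_block_count(1000))
```

In this model, switching from Lazy to Aggressive simply raises the share of cold/warm data that becomes a candidate for optimization; the choice of technique is independent of the policy.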

Following this configuration, SANsymphony systematically examines data access temperatures, identifying datasets that are relatively inactive and are suitable candidates for capacity optimization.

Automatically Determining Cold Datasets for Capacity Optimization
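
One common way to express an "access temperature" is to count accesses with an exponential decay, so that recent I/O weighs more than old I/O. The sketch below uses that approach purely as an illustration; the article does not describe how SANsymphony computes temperature, and the function names and the one-hour half-life are assumptions.

```python
def temperature(access_times, now, half_life_s=3600.0):
    """Sum of exponentially decayed accesses: recent I/O counts for more."""
    return sum(0.5 ** ((now - t) / half_life_s) for t in access_times)


def pick_cold(access_log, fraction, now):
    """Return the coldest `fraction` of dataset ids, coldest first.

    `access_log` maps a dataset id to a list of access timestamps (seconds).
    """
    ranked = sorted(access_log, key=lambda d: temperature(access_log[d], now))
    return ranked[: int(len(ranked) * fraction)]


now = 10_000.0
log = {
    "a": [9_990.0, 9_995.0],  # touched seconds ago: hot
    "b": [100.0],             # touched long ago: cold
    "c": [],                  # never touched: coldest
    "d": [9_000.0],           # touched fairly recently: warm
}
print(pick_cold(log, 0.5, now))
```

Here `fraction` plays the role of the policy's target percentage: a more aggressive policy would pass a larger value and select more of the ranked list.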

Once identified, these datasets undergo capacity optimization utilizing the platform’s built-in settings for inline deduplication and compression. (Note: Specific datasets can be earmarked so they are excluded from capacity optimization.) This optimized data is then shifted to a distinct tier. Importantly, this tier exclusively houses cold data selected for capacity optimization, ensuring no hot data resides within.
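
As a rough illustration of what deduplication and compression do to a set of cold blocks, the toy tier below stores each unique block once (keyed by its SHA-256 hash) and compresses it with zlib. This is a generic sketch using the Python standard library, not DataCore's implementation; the `OptimizedTier` class is hypothetical, and it omits reference counting and garbage collection for brevity.

```python
import hashlib
import zlib


class OptimizedTier:
    """Toy capacity-optimized tier: content-addressed, optionally compressed chunks."""

    def __init__(self, dedup=True, compress=True):
        self.dedup = dedup
        self.compress = compress
        self.chunks = {}     # key -> stored (possibly compressed) bytes
        self.block_map = {}  # block id -> key

    def write(self, block_id, data: bytes):
        # With dedup on, identical content maps to the same key and is stored once.
        key = hashlib.sha256(data).hexdigest() if self.dedup else f"blk:{block_id}"
        if not self.dedup or key not in self.chunks:
            self.chunks[key] = zlib.compress(data) if self.compress else data
        self.block_map[block_id] = key

    def read(self, block_id) -> bytes:
        stored = self.chunks[self.block_map[block_id]]
        return zlib.decompress(stored) if self.compress else stored

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


tier = OptimizedTier()
payload = b"A" * 4096
tier.write(1, payload)
tier.write(2, payload)        # duplicate content: no new chunk is stored
tier.write(3, b"B" * 4096)
print(len(tier.chunks), tier.stored_bytes())
```

The extra hashing and decompression on the read path is the latency cost mentioned earlier, which is why only cold data is a good candidate for this tier.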

Subsequently, SANsymphony continuously monitors data access behavior, using auto-tiering to keep datasets on the most fitting storage tiers. As access frequency shifts, data is moved in real time between the capacity-optimized tier and the other tiers typically involved in auto-tiering: cold data that becomes hot is promoted, and hot data that cools down becomes a candidate for capacity optimization. In this way, SANsymphony optimizes performance and storage capacity at the same time, a hallmark of its software-defined approach.

Adaptive Data Placement: Where Auto-Tiering Meets Capacity Optimization
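
The ongoing rebalancing can be pictured as a repeated placement pass: rank blocks by temperature, fill the fastest tier first, and let the coldest remainder fall to the capacity-optimized tier. The sketch below is a simplified model under that assumption; the tier names, capacities, and function names are hypothetical and are not taken from SANsymphony.

```python
def place(temperatures, tier_capacities):
    """Assign block ids to tiers, hottest blocks to the fastest tier first.

    `tier_capacities` is an ordered list of (tier_name, max_blocks), fastest
    first; the last tier takes whatever is left over.
    """
    ranked = sorted(temperatures, key=temperatures.get, reverse=True)
    placement, start = {}, 0
    for i, (name, capacity) in enumerate(tier_capacities):
        is_last = i == len(tier_capacities) - 1
        end = len(ranked) if is_last else start + capacity
        for block in ranked[start:end]:
            placement[block] = name
        start = end
    return placement


def moves(old, new):
    """Blocks whose tier changed between two placement passes."""
    return {b: (old.get(b), t) for b, t in new.items() if old.get(b) != t}


tiers = [("nvme", 1), ("sas", 1), ("capacity_optimized", 0)]
before = place({"a": 9.0, "b": 5.0, "c": 0.1}, tiers)
after = place({"a": 0.2, "b": 5.0, "c": 8.0}, tiers)  # "a" cooled, "c" heated up
print(moves(before, after))
```

In this run, block "c" is promoted out of the capacity-optimized tier once it becomes hot, while block "a" moves the other way, which mirrors the two-way movement described above.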

Returning to our earlier example with 52 VMs, Adaptive Data Placement reduces VM administration effort by automatically adjusting performance and capacity in sync with VM demands, removing the need for constant manual datastore rebalancing. The two I/O-intensive VMs get their data placed on the fastest storage tier, while the less active data of the other VMs undergoes capacity optimization.

The result? You get the best of both worlds:

- The best possible performance for hot data: faster responsiveness for applications

- The lowest possible footprint for cold data: significant cost and capacity savings

Key Highlights

- Apply Without Downtime: Executes during normal operations, ensuring that there’s no disruption in your processes.

- Transparent to Apps: All processes occur behind the scenes, making it entirely transparent to your applications.

- Fully Automatic: There’s no need for constant monitoring or manual adjustments. It operates autonomously, optimizing data placement based on real-time needs.

- Turn On/Off Any Time: Can be enabled or disabled whenever IT requirements call for it.

- Operates in Real Time: Data is always placed based on current access patterns and needs.

- Dynamic: Adjusts to the ebb and flow of data access requirements, ensuring optimal data placement all the time.

Experience the Power of Adaptive Data Placement

Adaptive Data Placement, an integral capability of the SANsymphony software-defined storage platform, represents DataCore’s commitment to enabling intelligent, optimal, and dynamic storage management. It’s not just about storing your data; it’s about storing it right. With real-time adjustments, optimal data placement, and seamless capacity optimization, SANsymphony empowers organizations to achieve both performance and capacity objectives together on the same storage volume (LUN/vDisk).

Embrace the future of data governance and give your IT team the tools they need to excel. Contact DataCore today and test-drive it in your environment.